Docker Software Basics

Docker is a software platform for building and running applications in small, lightweight execution environments called containers. Containers share the host's kernel but are isolated from other processes and operating system resources: each container is assigned resources that no other process can access, and it cannot access any resources not explicitly assigned to it. The concept of containerization has been around for some time, but usage became widespread only after the launch of Docker, an open-source project released in 2013. Originally built for Linux, Docker became a multiplatform solution and catalyzed the microservices-oriented approach to development.

Difference from Virtualization

Docker Containers resemble Virtual Machines in that both isolate and allocate resources, and a Container can be copied to any host that runs Docker without losing its functionality. Even so, Docker Containers are not the same as Virtual Machines.

First of all, Docker is a tool for data processing and is not meant for data storage (for persistent data you will have to use mounted volumes). Every Docker Container has a top read/write layer, and once the Container is removed, both the layer and its content are erased. Also, Docker Images use a different approach to virtualization and contain a minimal set of everything needed to run an application: code, runtime, system tools, system libraries, and settings. Because Containers share the host's kernel instead of each carrying a full guest operating system, they take up less space than VMs and start much faster.
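As a minimal sketch of the point about storage, the commands below write a file into a named volume from one container and read it back from a second, unrelated container. The volume name `demo_data` and the file path are illustrative assumptions, and the Docker steps are skipped when no daemon is reachable.

```shell
# Illustrative names, not taken from the article.
VOLUME=demo_data
IMAGE=ubuntu:18.04

if docker info >/dev/null 2>&1; then
  docker volume create "$VOLUME"
  # The first container writes into the volume and is removed on exit (--rm).
  docker run --rm -v "$VOLUME":/data "$IMAGE" sh -c 'echo "kept" > /data/note.txt'
  # A new container still sees the file, because it lives in the volume
  # and not in the first container's writable layer.
  docker run --rm -v "$VOLUME":/data "$IMAGE" cat /data/note.txt
  docker volume rm "$VOLUME"
else
  echo "Docker daemon not reachable; skipping the volume demo"
fi
```

Anything written outside `/data` in the first container would have been lost when that container was removed.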

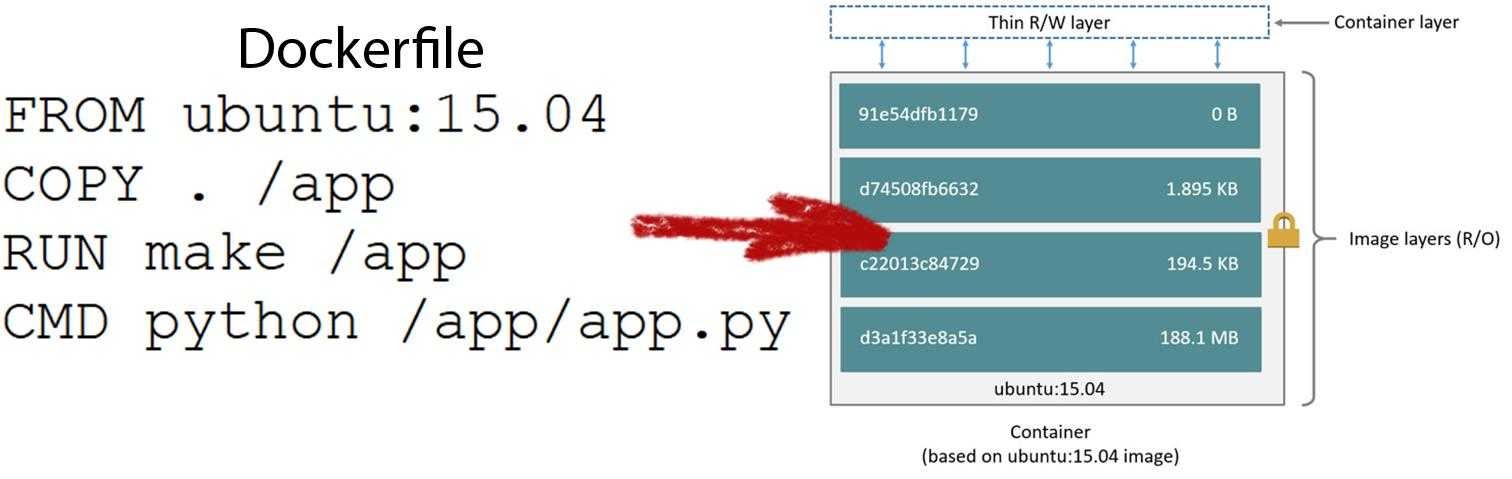

Images and Containers

(Source: docs.docker.com)

A Docker Image is a set of layers where each layer represents an instruction from the Dockerfile. The layers are stacked on top of each other. Each new layer is only a set of differences from the previous one.

When you run the command docker images, you get a list containing the names and properties of all locally present Images.

    REPOSITORY   TAG      IMAGE ID       CREATED       SIZE
    ubuntu       latest   3556258649b2   10 days ago   64.2MB

The key fields of the list are:

Repository – the name of the repository where you pulled the Image from. If you plan to push the Image, this name must be unique. Otherwise, you can store the Image locally and name it however you want.

Tag – used to convey information about the specific version of the Image. It is an alias for the Image ID. If no tag is specified, then Docker automatically uses the latest tag as a default.

Image ID – locally generated and assigned Image identification.
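One way to see that a tag is only an alias for an Image ID is to give the same image a second name and compare the IDs. In this sketch the name `local/ubuntu-copy:demo` is a made-up example, and the Docker steps run only when a daemon is reachable.

```shell
SOURCE=ubuntu:latest
ALIAS=local/ubuntu-copy:demo   # hypothetical local name, never pushed anywhere

if docker info >/dev/null 2>&1; then
  docker pull "$SOURCE"
  docker tag "$SOURCE" "$ALIAS"
  # Both rows should show the same IMAGE ID, since they name the same image.
  docker images --format 'table {{.Repository}}\t{{.Tag}}\t{{.ID}}' | grep -E 'REPOSITORY|ubuntu'
  # Removes only the extra name; the image stays available under $SOURCE.
  docker rmi "$ALIAS"
else
  echo "Docker daemon not reachable; skipping the tag demo"
fi
```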

A Docker Container is a running instance of the Docker Image. When you create a new Docker Container, you add a new writable layer on top of the underlying layers. This layer is often called the container layer. All changes made to the running container such as writing new files and modifying and deleting existing ones are added to this thin writable container layer. You can run multiple Containers out of one Image.

To list running Containers use docker ps, and docker ps -a for all the Containers, including the terminated ones.

    CONTAINER ID   IMAGE    COMMAND       CREATED         STATUS          PORTS   NAMES
    626b43558b2    ubuntu   "/bin/bash"   6 minutes ago   Up 10 seconds           xenodochial_leakey

The most important fields for a Container are:

Container ID – a unique, locally assigned identifier of the Docker Container.

Names – a human-readable name for the Docker Container.
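When only a few of these fields matter, the listing can be narrowed with the `--format` and `--filter` options of `docker ps`. The column selection below is just one possible choice, shown as a sketch.

```shell
# Columns to print: container ID, name, and status.
FORMAT='table {{.ID}}\t{{.Names}}\t{{.Status}}'

if docker info >/dev/null 2>&1; then
  docker ps --format "$FORMAT"             # running Containers only
  docker ps -a --format "$FORMAT"          # all Containers, including stopped ones
  docker ps -aq --filter status=exited     # IDs only, restricted to exited Containers
else
  echo "Docker daemon not reachable; skipping the listing demo"
fi
```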

Running Containers

A Container is a process that runs on a local or a remote host. To run a Container use: docker run. With the run command, a developer can specify or override image defaults related to:

- detached (-d) or foreground running

- container identification (--name)

- network settings

- runtime constraints on CPU and memory

You can restart stopped Containers with docker start.
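The options above can be combined in a single command. The following sketch starts a detached, named Container with CPU and memory limits, stops it, and starts it again. The name `demo_limits` and the limit values are arbitrary assumptions chosen for illustration.

```shell
NAME=demo_limits   # hypothetical container name
CPUS=0.5           # at most half of one CPU core
MEMORY=256m        # at most 256 MB of RAM

if docker info >/dev/null 2>&1; then
  # -d runs in the background; sleep keeps the main process alive.
  docker run -d --name "$NAME" --cpus "$CPUS" --memory "$MEMORY" ubuntu sleep 300
  docker stop -t 1 "$NAME"      # wait 1 second before killing the process
  docker start "$NAME"          # same Container, same writable layer
  docker ps --filter "name=$NAME"
  docker rm -f "$NAME"          # clean up
else
  echo "Docker daemon not reachable; skipping the run demo"
fi
```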

Processes

Now, let’s look inside a Docker Container. Directories in the Container are organized according to the Filesystem Hierarchy Standard (FHS), which defines the directory structure and directory contents in Linux distributions. Containers only exist while the main process inside is running. In the Docker Container, the main process always has PID 1. You can start as many secondary processes as your Container can handle, but as soon as the main process stops, the entire Container terminates.
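Both claims about the main process can be checked from the command line. In this sketch the container name `demo_pid` is an assumption. The second command leaves a background process running, yet the Container still exits as soon as the main shell does.

```shell
NAME=demo_pid   # hypothetical container name

if docker info >/dev/null 2>&1; then
  # $$ expands inside the Container to the PID of the main shell process.
  docker run --rm ubuntu sh -c 'echo "main process PID: $$"'
  # The shell starts a secondary process, prints a message, and exits.
  docker run --name "$NAME" ubuntu sh -c 'sleep 300 & echo "main process exiting"'
  # The status should read "Exited", even though sleep had not finished.
  docker ps -a --filter "name=$NAME" --format '{{.Names}}: {{.Status}}'
  docker rm "$NAME"
else
  echo "Docker daemon not reachable; skipping the PID demo"
fi
```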

Registry

The place to store and exchange Images is called a Docker Registry. The most popular registry is Docker Hub. Before you containerize a service yourself, it is worth visiting the Hub first to see if someone has already done this for you.

A registry is a centralized platform where developers and machines can store and exchange Images with a single command. Note that a registry does not store running Containers, but only Docker Images.

To download an Image from the Hub: docker pull [image name]

To upload an Image to the Hub: docker push [image name]
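Before an Image can be pushed to Docker Hub, it usually has to be tagged with the name of the target repository. The sketch below assumes a hypothetical account `exampleuser` and a hypothetical repository `ubuntu-demo`. The push succeeds only after `docker login` with an account that may write to that repository.

```shell
DOCKER_USER=${DOCKER_USER:-exampleuser}   # hypothetical Docker Hub account
SOURCE=ubuntu:18.04
TARGET="$DOCKER_USER/ubuntu-demo:1.0"     # hypothetical repository and tag

if docker info >/dev/null 2>&1; then
  docker pull "$SOURCE"
  # Give the local image a name that points at the target repository.
  docker tag "$SOURCE" "$TARGET"
  docker push "$TARGET" || echo "push failed; are you logged in as $DOCKER_USER?"
else
  echo "Docker daemon not reachable; skipping the push demo"
fi
```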

Docker Hub also has a useful feature for learners: image pages can show the Dockerfile used to build the Image, which you can study to learn how to create your own.

Dockerfile

Before writing a Dockerfile it is better to first learn the basic instructions. Usually, a Dockerfile consists of lines like this:

# Comment

INSTRUCTION arguments

Instructions are not case-sensitive, but by convention they are written in upper case so that they are easy to distinguish from the arguments. Take a look at the following Dockerfile example:

FROM ubuntu:18.04

COPY . /app

RUN make /app

CMD python /app/app.py

FROM

FROM – the only mandatory instruction, which initializes a new build and sets the Base Image for following instructions. The specified Docker Image will be pulled from the Docker Hub registry if it does not exist locally.

Example:

FROM debian:buster – pulls the debian image with the tag buster and sets it as the Base Image

COPY

The COPY instruction copies files and directories from the build context and adds them to the filesystem of the image.

Example:

COPY /project /usr/share/project

Note that an absolute source path refers to a path within the build context, not an absolute path on the host. If COPY /users/home/student/project /usr/share/project is used, the build will fail with an error that /users/home/student/project does not exist, because the build context has no subdirectory users/home/student/project.
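The build-context rule is easier to see with a throwaway context. The sketch below creates a temporary directory containing a `project` folder and a Dockerfile, then builds from it. The image name `copy-demo` and the file contents are assumptions made for this example.

```shell
CTX=$(mktemp -d)              # temporary build context
trap 'rm -rf "$CTX"' EXIT     # remove it when the script ends
mkdir -p "$CTX/project"
echo "hello from the build context" > "$CTX/project/readme.txt"

# /project below is resolved against the build context root,
# so it refers to the project directory created above.
cat > "$CTX/Dockerfile" <<'EOF'
FROM ubuntu:18.04
COPY /project /usr/share/project
CMD ["cat", "/usr/share/project/readme.txt"]
EOF

if docker info >/dev/null 2>&1; then
  docker build -t copy-demo "$CTX"   # the last argument is the build context
  docker run --rm copy-demo          # should print the contents of readme.txt
  docker rmi copy-demo
else
  echo "Docker daemon not reachable; Dockerfile written to $CTX"
fi
```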

RUN

The RUN instruction executes any commands in a new layer on top of the current image.

CMD

The main purpose of CMD is to provide default values for an executing container. There can only be one CMD instruction in a Dockerfile. If you list more than one CMD then the last CMD will take effect.
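To illustrate both rules, the sketch below builds an image whose Dockerfile lists two CMD instructions, runs it with the default, and then runs it again with a command given on the command line. The image name `cmd-demo` and the echoed strings are assumptions for this example.

```shell
CTX=$(mktemp -d)              # temporary build context
trap 'rm -rf "$CTX"' EXIT     # remove it when the script ends

cat > "$CTX/Dockerfile" <<'EOF'
FROM ubuntu:18.04
CMD ["echo", "first"]
CMD ["echo", "second"]
EOF

if docker info >/dev/null 2>&1; then
  docker build -t cmd-demo "$CTX"
  docker run --rm cmd-demo                    # uses the last CMD, so it echoes "second"
  docker run --rm cmd-demo echo "overridden"  # arguments after the image name replace CMD
  docker rmi cmd-demo
else
  echo "Docker daemon not reachable; Dockerfile written to $CTX"
fi
```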

You can learn more instructions from Docker Docs.

Docker Networking

Docker is an excellent tool for managing and deploying microservices. Microservice architecture is increasingly popular for building large-scale applications. Rather than using a single codebase, applications are broken down into a collection of smaller microservices. One of the reasons Docker containers and services are so powerful is that you can connect them together.

Docker’s networking subsystem is pluggable, using drivers. Several drivers exist by default, and provide core networking functionality:

bridge: The default network driver, usually used when your applications run in standalone containers that need to communicate.

host: The host network mode is for standalone containers, which removes the network isolation between the container and the Docker host, and uses the host’s networking directly.

overlay: The overlay network driver connects multiple Docker daemons together and enables swarm services to communicate with each other.

macvlan: The macvlan driver allows you to assign a MAC address to a container, making it appear as a physical device on your network.

none: The none driver disables all networking for the specified container.

Create networks

You do not need the --driver bridge flag to create a bridge network, since bridge is the default driver, but the example below shows how to specify it. When you create a network, Docker assigns it a non-overlapping subnetwork by default. You can override this default and set the subnetwork directly with the --subnet option, and you can also set the gateway with --gateway. On a bridge network, you can only create a single subnet.

$ docker network create --driver bridge --subnet [subnet] --gateway [gateway] [network name]

To connect a container to the network, use the --network flag. On user-defined networks, you can also choose the IP addresses for the container with the --ip and --ip6 flags when you start it. You can also publish a container’s port on a specific host port using the -p flag. You can only connect to one network during the docker run command, so you need to use docker network connect afterward to connect to the other networks as well.

$ docker run -dit --name [container name] --network [network name] --ip [ip] -p [host:docker] [image name]

$ docker network connect [network name] [container name]
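With the placeholders filled in, the three commands could look like the sketch below. All names, the subnet, the addresses, and the port mapping are assumptions chosen for illustration. The subnet or host port may already be in use on a given machine, in which case different values are needed.

```shell
NET=demo_net                # hypothetical network names
SECOND_NET=demo_net2
SUBNET=172.28.0.0/16        # assumed to be unused on the host
GATEWAY=172.28.0.1
APP=demo_app                # hypothetical container name
APP_IP=172.28.0.10          # must lie inside $SUBNET

if docker info >/dev/null 2>&1; then
  docker network create --driver bridge --subnet "$SUBNET" --gateway "$GATEWAY" "$NET"
  docker network create "$SECOND_NET"   # bridge driver and automatic subnet by default
  # Host port 8080 is mapped to port 80 inside the Container.
  docker run -dit --name "$APP" --network "$NET" --ip "$APP_IP" -p 8080:80 ubuntu
  docker network connect "$SECOND_NET" "$APP"
  # Print every network the Container is attached to, with its address there.
  docker inspect --format '{{range $name, $cfg := .NetworkSettings.Networks}}{{$name}}={{$cfg.IPAddress}} {{end}}' "$APP"
  docker rm -f "$APP"
  docker network rm "$NET" "$SECOND_NET"
else
  echo "Docker daemon not reachable; skipping the network demo"
fi
```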

Conclusion

Docker makes it easy and straightforward to install, test, and run software on almost any computer, whether a desktop, a web server, or a cloud-based platform. Docker also suits the development of a single application as a suite of microservices: small services, each running in its own process and communicating with the others through lightweight mechanisms. So if you are developing multiple applications and want them to interconnect, Docker would be a good option for you. A registry of free public Docker images and comprehensive documentation make it easier for beginners to get started.

Written by Mykyta Zaitsev, Security Engineer